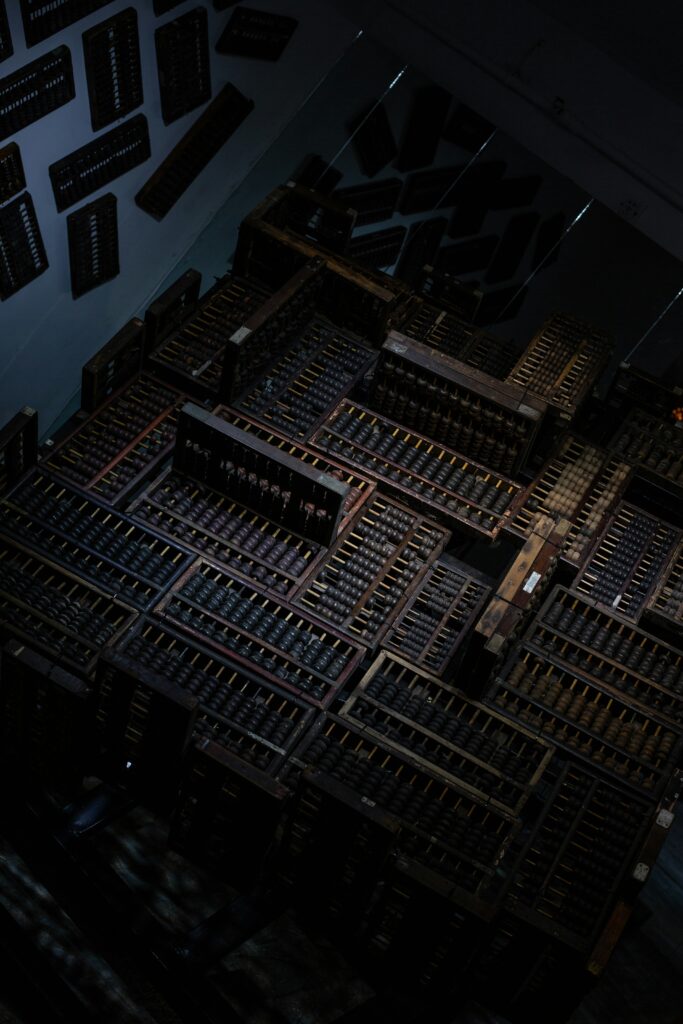

The abacus, which by some accounts dates back to around 3000 BCE, is frequently cited as the earliest known computing device. It performed basic arithmetic by sliding beads back and forth along a set of rods or wires. From the 17th to the 19th centuries, humanity moved on to mechanical calculators, which used gears, wheels and other mechanical components to carry out calculations. In 1837 Charles Babbage described the Analytical Engine, a design for a general-purpose mechanical computer. It was never completed, but because it was designed to be programmed with punched cards, it is regarded as a forerunner of modern computers. In the late 19th century Herman Hollerith developed tabulating machines that processed and analyzed data stored on punched cards. These machines were used for tasks such as tabulating the 1890 United States census, and they were an important step toward modern computing.

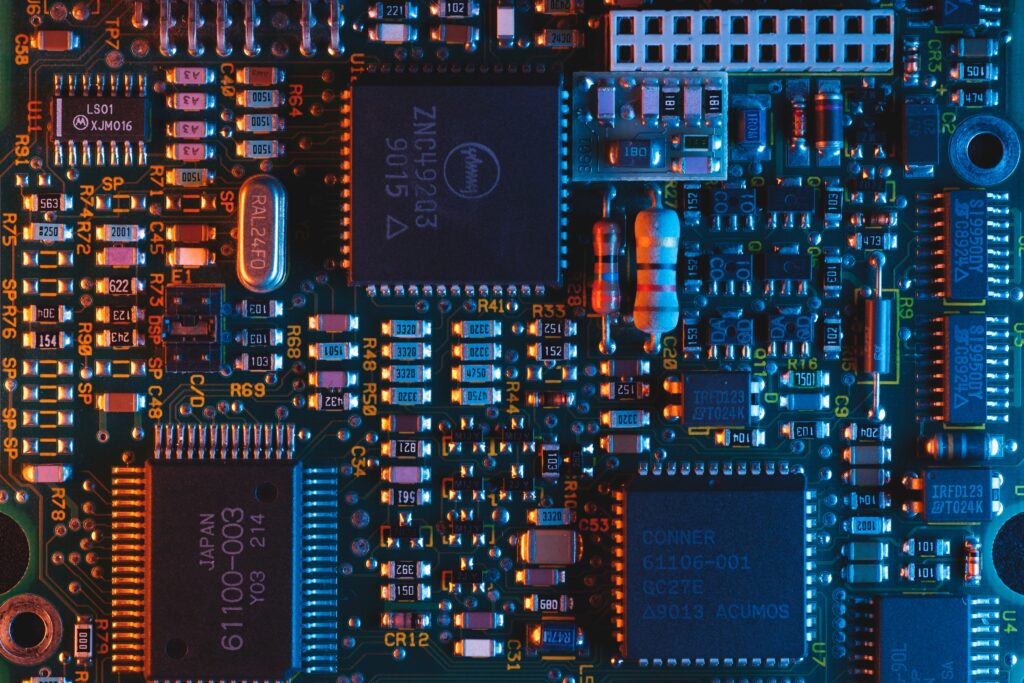

Vacuum tube computers, including the Atanasoff-Berry Computer (ABC) and the Electronic Numerical Integrator and Computer (ENIAC), marked the transition from mechanical to electronic computing in the 1930s and 40s. Vacuum tubes enabled faster calculations and more advanced functionality. The invention of the transistor at Bell Laboratories in 1947 by John Bardeen, Walter Brattain and William Shockley revolutionized computing. Transistors, which are smaller and more dependable than cumbersome vacuum tubes, replaced them and made smaller, faster computers possible. In 1958 and 1959, Jack Kilby and Robert Noyce independently developed the integrated circuit, which combines numerous transistors and other electronic components on a single chip. This innovation cleared the path for miniaturized electronics and microprocessors.

The Altair 8800 and later computers like the Apple II and IBM PC helped popularize personal computing in the 1970s and 80s. These cheaper and more user-friendly machines made computing accessible to both individuals and companies. With the advent of the internet and the growth of the World Wide Web, computing became a vast worldwide network of interconnected devices. Tim Berners-Lee created HTTP (a transfer protocol), HTML (a markup language) and the URL (an addressing scheme) to make information sharing and browsing simple.

The emergence of smartphones and tablets, together with advances in wireless technology, drove the widespread use of mobile computing. Cloud computing arose alongside it, offering scalable, on-demand access to computing resources over the internet.

Quantum computing is an emerging technology that uses the laws of quantum mechanics to carry out calculations. Classical computers use binary bits, each of which is either 0 or 1. Quantum computers use qubits, which can exist in superposition and can be entangled with one another. Although quantum computers are still in the early phases of research, practical machines could solve certain difficult problems more quickly than classical computers.
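To make the contrast between bits and qubits more concrete, the following is a minimal sketch in plain Python that simulates a single qubit on a classical machine. It represents the qubit as a pair of complex amplitudes and applies a Hadamard gate to put it into an equal superposition. The names `ZERO`, `ONE`, `hadamard` and `measurement_probabilities` are illustrative choices, not part of any quantum library, and the sketch does not cover entanglement, which requires at least two qubits.

```python
import math

# A single qubit is modeled as a pair of complex amplitudes (a, b)
# for the basis states |0> and |1>, with |a|^2 + |b|^2 = 1.
# A classical bit corresponds to exactly one of these two states.
ZERO = (1 + 0j, 0 + 0j)
ONE = (0 + 0j, 1 + 0j)


def hadamard(state):
    """Apply the Hadamard gate, which maps |0> to an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def measurement_probabilities(state):
    """Return the probabilities of measuring 0 and 1 (squared magnitudes)."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)


# Start in |0>, then create a superposition of |0> and |1>.
superposed = hadamard(ZERO)
p0, p1 = measurement_probabilities(superposed)
print(round(p0, 3), round(p1, 3))  # prints: 0.5 0.5
```

Before measurement, the simulated qubit carries both amplitudes at once. Measuring it yields 0 or 1 with equal probability, which is the behavior a single classical bit cannot reproduce.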